As part of the effort to integrate more biologically inspired mechanisms into high-performing, highly efficient machine learning models, the chair investigates hybrid methods that combine the best of both worlds. One example is encoding conventional ANNs into their spiking counterparts and training them in that form. The energy efficiency is realized through new computing paradigms such as spike-latency encoding of NN activations, time-domain computing or, more fundamentally, the simulation of biological neural network models.
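As a rough illustration of spike-latency encoding, the sketch below maps each non-negative activation to a single spike time, so that larger activations fire earlier. The linear mapping, the function names, and the `t_max` window are assumptions made for this example and are not taken from the chair's publications.

```python
def encode_latency(activations, t_max=1.0):
    """Map non-negative activations to spike times (larger value -> earlier spike).

    Assumption: a linear mapping onto the window [0, t_max]; a neuron with
    zero activation does not spike at all (represented as None).
    """
    a_max = max(activations)
    if a_max <= 0:
        return [None] * len(activations)
    return [t_max * (1.0 - a / a_max) if a > 0 else None for a in activations]


def decode_latency(spike_times, a_max, t_max=1.0):
    """Invert the linear mapping; a missing spike decodes to zero."""
    return [0.0 if t is None else a_max * (1.0 - t / t_max) for t in spike_times]


if __name__ == "__main__":
    times = encode_latency([0.0, 1.0, 2.0])
    print(times)
    print(decode_latency(times, a_max=2.0))
```

Because each neuron emits at most one spike, the information sits in when the spike occurs and not in how many spikes are sent.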

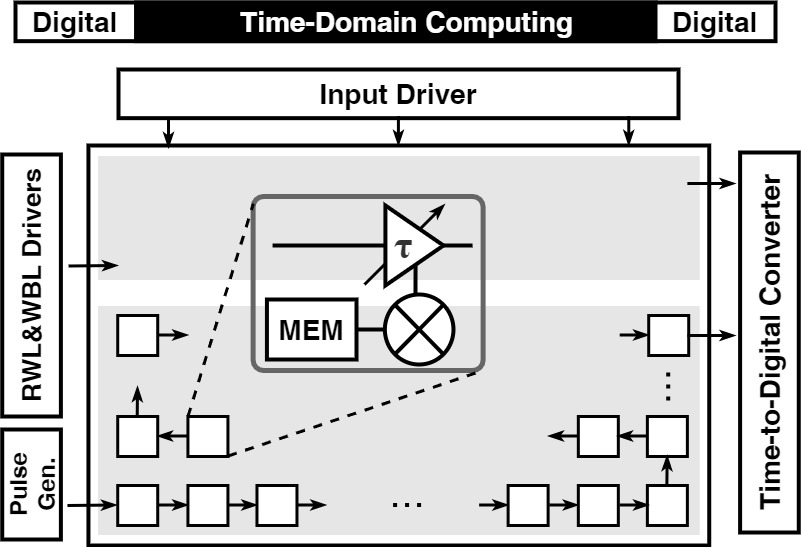

Conventional digital computing based on Boolean logic is reaching an energy bottleneck, and analog computing has limitations in technology scaling. To overcome these challenges, we are exploring new methods that use time as an information carrier and encode numeric values as the relative distances between discrete arrival times. By leveraging the inherent delay characteristics of the circuit, we target better energy efficiency while preserving the robustness of digital computing.
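The idea of encoding a value as the distance between two arrival times can be sketched in a few lines. This is a behavioral toy model only: the 10 ps unit delay, the integer value range, and all function names are hypothetical and chosen for illustration.

```python
# Hypothetical delay of one delay element, in picoseconds (assumption).
UNIT_DELAY_PS = 10


def encode(value, t_ref_ps=0):
    """Return (reference edge, data edge); the value is their distance."""
    if value < 0:
        raise ValueError("this sketch only encodes non-negative integers")
    return (t_ref_ps, t_ref_ps + value * UNIT_DELAY_PS)


def decode(edges):
    """Recover the value from the distance between the two edges."""
    t_ref_ps, t_data_ps = edges
    return (t_data_ps - t_ref_ps) // UNIT_DELAY_PS


def add_constant(edges, constant):
    """Add a constant by passing the data edge through extra delay elements."""
    t_ref_ps, t_data_ps = edges
    return (t_ref_ps, t_data_ps + constant * UNIT_DELAY_PS)


def first_arrival(a, b):
    """Minimum of two values that share a reference edge: earlier edge wins."""
    assert a[0] == b[0], "operands must share the reference edge"
    return (a[0], min(a[1], b[1]))


def last_arrival(a, b):
    """Maximum of two values that share a reference edge: later edge wins."""
    assert a[0] == b[0], "operands must share the reference edge"
    return (a[0], max(a[1], b[1]))


if __name__ == "__main__":
    print(decode(add_constant(encode(3), 4)))
    print(decode(first_arrival(encode(2), encode(6))))
```

Only the relative distance matters in this model: shifting both edges by the same amount leaves the decoded value unchanged.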

Our research has demonstrated the potential of time-domain computing in standard digital design flows, as well as in mixed-signal approaches for emerging machine learning applications. We are now exploring the intersection of time-domain computing and compute-in-memory. The goal is to develop novel computing architectures that overcome the energy limitations of conventional computing methods and enable more efficient systems. This work also takes modifications at the algorithmic level into account, leading to algorithm-hardware co-design.
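To give a feel for how a time-domain approach could evaluate a binary neural network neuron, the following toy model lets an edge travel through one cell per weight: a cell is fast when input and weight agree and slow when they differ. The edge then races against a reference edge, and the winner determines the neuron's sign. This is a simplified behavioral sketch under assumed delay values, not the circuit described in the publications listed here.

```python
# Hypothetical per-cell delays in picoseconds (assumptions for illustration).
FAST_PS = 10  # input and weight agree (XNOR = 1)
SLOW_PS = 20  # input and weight differ (XNOR = 0)


def path_delay_ps(inputs, weights):
    """Accumulated delay of an edge passing one cell per (input, weight) pair."""
    return sum(FAST_PS if x == w else SLOW_PS for x, w in zip(inputs, weights))


def reference_delay_ps(n):
    """Delay of a reference path tuned to the 'half match, half mismatch' case."""
    return n * (FAST_PS + SLOW_PS) // 2


def neuron_output(inputs, weights):
    """Return +1 if the data edge is not later than the reference edge, else -1."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    on_time = path_delay_ps(inputs, weights) <= reference_delay_ps(len(inputs))
    return 1 if on_time else -1


def reference_sign(inputs, weights):
    """Conventional computation of the same result for comparison."""
    dot = sum(x * w for x, w in zip(inputs, weights))
    return 1 if dot >= 0 else -1


if __name__ == "__main__":
    x = [1, -1, 1, 1, -1]
    w = [1, 1, -1, 1, -1]
    print(neuron_output(x, w), reference_sign(x, w))
```

In this model the multiply-accumulate never materializes as a binary number. Only the order in which two edges arrive is observed, which is where such schemes aim to save energy.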

[J. Lou et al., “An All-Digital Time-Domain Compute-in-Memory Engine for Binary Neural Networks with Beyond POPS/W Energy Efficiency,” ESSCIRC, 2022, doi: 10.1109/ESSCIRC55480.2022.9911382]

[F. Freye et al., “Memristive Devices for Time Domain Compute-in-Memory,” IEEE J. on Exploratory Solid-State Computational Devices and Circuits, 2022, doi: 10.1109/JXCDC.2022.3217098]

[J. Lou et al., “Scalable Time-Domain Compute-in-Memory BNN Engine with 2.06 POPS/W Energy Efficiency for Edge-AI Devices,” GLSVLSI, 2023, doi: 10.1145/3583781.3590220]

[J. Lou et al., “An Energy Efficient All-Digital Time-Domain Compute-in-Memory Macro Optimized for Binary Neural Networks,” IEEE Transactions on Circuits and Systems I: Regular Papers, 2023, doi: 10.1109/TCSI.2023.3323205]

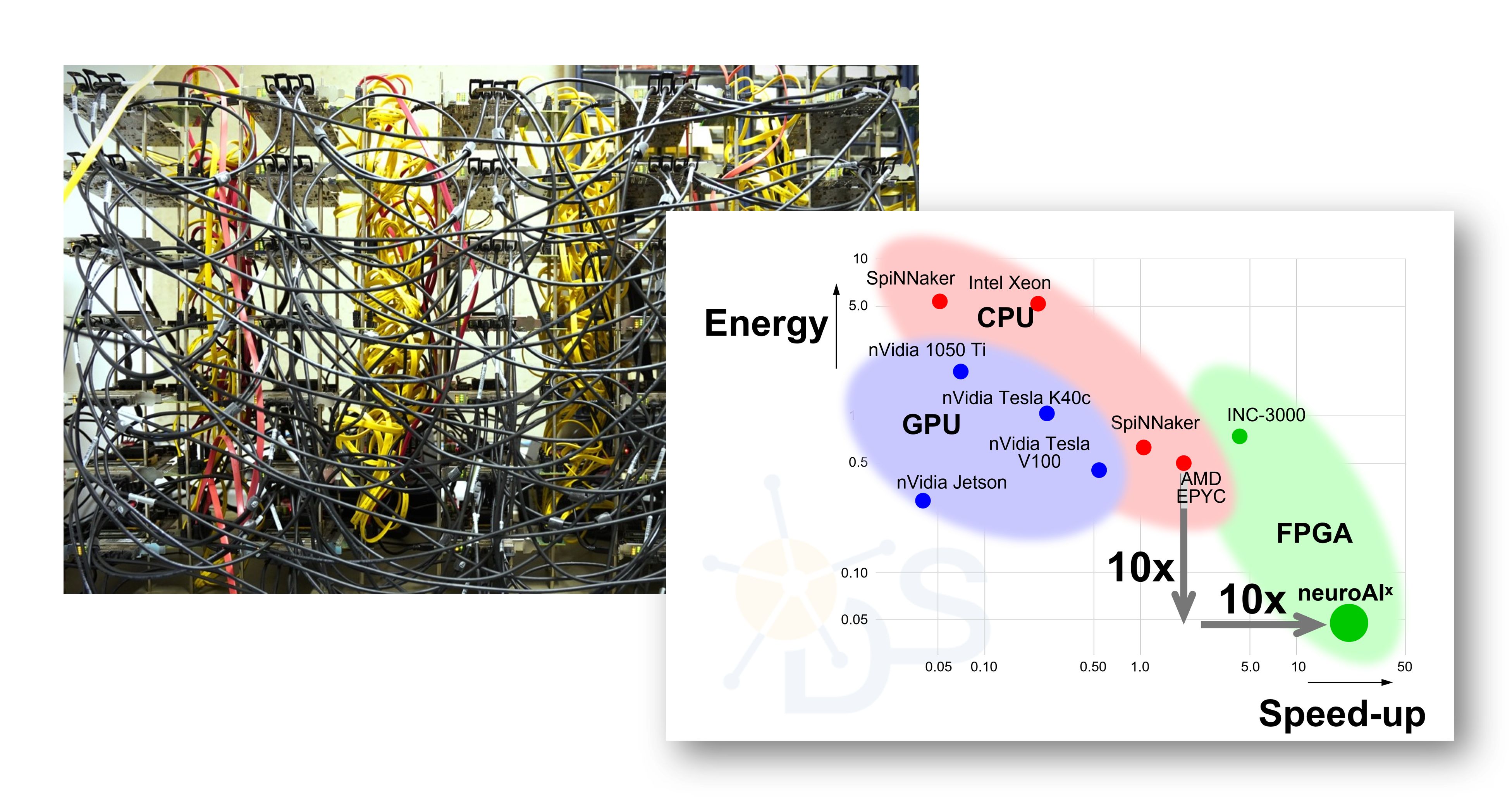

neuroAIˣ

Our team is developing an innovative system for simulating biological neural networks. Our goal is to gain a deeper understanding of the human brain and to use that knowledge to advance the field of artificial intelligence. To achieve this, we combine computer architecture, hardware synthesis, embedded programming, driver development, and high-frequency data transfer technologies with expertise from a diverse range of backgrounds, including neuroscience. Our most exciting achievement so far is breaking the world record for simulating the "cortical microcircuit" model, which now runs 20× faster than biological real time. This speed-up has the potential to accelerate research into how the brain processes information and learns, opening new possibilities for the future of AI.

Join us on this fascinating journey as we explore the intricacies of the human brain and work towards a better understanding of how it functions.

[K. Kauth et al., “neuroAIx-Framework: design of future neuroscience simulation systems exhibiting execution of the cortical microcircuit model 20× faster than biological real-time,” Frontiers in Comput. Neurosci., 2023, doi: 10.3389/fncom.2023.1144143]

[V. Sobhani et al., “A Digital Twin Network for Computational Neuroscience Simulators: Exploring Network Architectures for Acceleration of Biological Neural Network Simulations,” WoWMoM, 2023, doi: 10.1109/WoWMoM57956.2023.00084]

[V. Sobhani et al., “Deadlock-Freedom in Computational Neuroscience Simulators,” IEEE Design & Test, 2022, doi: 10.1109/MDAT.2022.3204199]

[K. Kauth et al., “Communication Architecture Enabling 100x Accelerated Simulation of Biological Neural Networks,” SLIP^2, 2020]